Gamesalad and Random Number Generation - Let's Science!

Deviros

Member, PRO Posts: 87

Deviros

Member, PRO Posts: 87

Now - I don't know about y'all but i tend to find that my gamesalad projects that utilize RNG (Random Number Generation) seem to favor a single number, or a range of numbers, over time, usually leaning toward the top end of the spectrum. This is quite frustrating, and I would tend to perceive, even with a random (0,1) generator, that the generator was favoring 1 far more than 0.

Now, as randoms are great for figuring out things like critical chance, damage calculations, and general entropy for realism, having a random number that isn't... random is less than ideal.

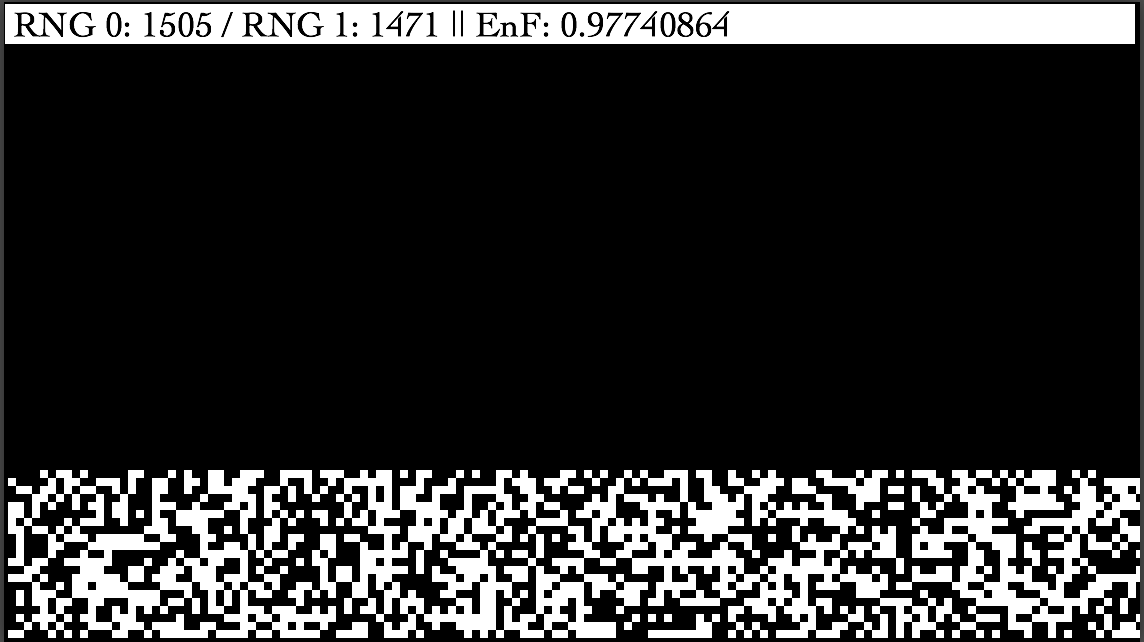

I got to thinking that, instead of trusting my own perception, perhaps, it would be best to see exactly what the PseudoRNG in GS is really capable of! So i built a little application to test the PRNG, and here's the result:

Initially, i used a simple 'random(0,1)' to get a 'yes or no' sample, and as you can see - in that image, we're pretty close to a 1.0 entropic factor - which is actually really good - the white pixels represent 1, and the black represents 0.

Here's a GIF of another run through, in case you're curious as to what it looks like. The low EnF at the beginning is expected, but the longer it runs, the closer it should get to 1, as each number (in this case, 0, and 1) should have equal 'chance' to be called.

(The app doesn't run that fast, though that would be nice =P)

So that's good news - GameSalad's PRNG is actually quite impressively random! I repeated the experiment with floor(random(0,1999)/1000) as well, just to get a wider range of numbers, as well as as "if random(0,1999) > 999" and the factor remained pretty much solidly above .95 the whole time, after the initial spin up.

Finally - here's a link to the application itself if anyone has a desire to poke around with it:

Comments

Cool little test. Always wondered how random the ran function was.

Thanks! Yea - the perceived 'failure' of the RNG was always driving me a little crazy - now i can rest easier when relying on it! =D

Nice test @Deviros, giving peace of mind.

Thank you @Hopscotch!

One other thing I forgot to mention, in regards to the test:

Pure entropy also requires that with a grid map like that, that there are no perceivable patterns in the display - another thing that gs passes on - so that's good!

If anyone does play with the app, please know that I wrote it in about 5 minutes so it's possibly the least optimized application I've ever written - don't judge =P

Here's an example that ran for almost 4000 ticks - it's pretty impressively even, and pattern free:

//

Not!

@Hopscotch

Here's a faster version (a bit thrown together I'm sure you could improve on it) - attached:

I'm not so sure that's true, I can't see what in the maths would demand that there were no perceivable patterns ? In a random system like this any result is permissible.

If I were to see the word 'Hello' written in white on a perfectly black background there would be nothing about that result that should make me suspect it was not the product of a random process.

And also, is there any way of telling whether a process is random or not, I can't imagine any test that could establish something like that ?

http://dilbert.com/strip/2001-10-25

@Hopscotch

https://xkcd.com/221/

@Hopscotch hah! nice 'pattern' identification!

@Socks Well - i meant more like repeating patterns - as in an obviously repeating sequence of pixels over and over:

i.e. something that looks like this:

Not so much the "items in the clouds" type =D

@GeorgeGS Annnnnnd that's why XKCD is the best.

I'd say the same thing, what would it be in the maths that would prevent this repeating sequence of pixels ? If a system is random then an obviously repeating sequence of pixels, over and over, is as likely as any other outcome.

Well sort of. If you've got one full image like that, and only one, sure. But if you ran it hundreds of times and kept getting the same patterns those odds change from being the same as any other, to highly unlikely. And the more it happens, the more unlikely it is.

So as a "at a glance" method of detection with multiple samples, he's not wrong. A preset/partial random vs a random like we have, you'd should be able to see the difference based on that in just a few runs.

I can't see how that would work either !

Let's imagine a perfect chessboard pattern (a perfect arrangement of black / white / black / white / black / white . . . . for all 4000 squares) is produced by your first run of the code.

The chances of this happening are 1/2^4000.

And the chances of any other arrangement of the squares is also 1/2^4000.

Ok, now let's say this perfect chessboard pattern had come up, say, 200 times in a row, what mechanism is at work that would make the chances of the pattern coming up on the 201st iteration less than 1/2^4000 ?

For this to work the system would need to pass information from the last run of the code to the next, but by definition a random system doesn't do that.

Another problem with this idea would be around resetting the system, if you ran the code 200 times and got the perfect chessboard pattern - and then reset the computer, switched it off, left the building and booted it up again the next day, would the idea that because you have had 200 perfect chessboard patterns previously you are less likely to get another one still stand ?

Or put another way, are you able to reset the code to lose the knowledge that the previous 200 iterations produced a perfect chessboard patterns ? Or is this 'memory' permanent, that is to say have you somehow changed to code by getting certain outputs, which means the code will behave differently on further iterations ?

What if you now unplug your computer, buy a new one and have someone else write the same code on this new computer and run that code, would the 'memory' of the previous 200 patterns make its way into this new code on this new machine, or would the odds of any pattern (including the perfect chessboard pattern) be reset to 1/2^4000 ?

If the previous 200 patterns effects the first outcome of this new code, through what ghostly mechanism is it working, if the previous 200 patterns don't effect the first outcome of this new code then what ghostly mechanism is at work whereby the identical code on two identical machines work in different ways (the new machine has a higher chance of producing the perfect chessboard pattern at 1/2^4000 - whereas the old machine has a less than 1/2^4000 chance) !?

Another problem would be that if the more a pattern happens, the more unlikely it is to occur again then running the code through billions and billions of iterations (and billions and trillions of quadrillions . . . ) as patterns came up, their chances of reoccurring would diminish, eventually the system would run out of viable patterns, with every permutation's odds of happening reducing to zero !?

Where would this memory effect be coming from ?

You could reduce this idea from 4000 on/of states to a six sided dice, the translation would be that if you rolled a '6' one hundred times in a row, then not only would the next roll be less likely (less than 1/6) to be a 6, but each time you do get a 6 then the odd reduce even further !?

Again, you could ask the same question, how is the dice remembering what the previous rolls where so it's able to regulate future rolls ?

I want the answers on my desk by 7:30am

I know we are off in the world of useless theorising here but there is simply no way of detecting whether something like this is random or not from looking at the results, even if the squares spelled out "Help me, they've trapped me and turned me into a pixel" 160 times in a row, there would be no reason to suspect the system is not random.

but there is simply no way of detecting whether something like this is random or not from looking at the results, even if the squares spelled out "Help me, they've trapped me and turned me into a pixel" 160 times in a row, there would be no reason to suspect the system is not random.

If I have a ran(0,1) it's a 50% chance of being either 0 or 1 at any given point. But that's not we are looking at.

We are looking at a visual representation of the outcome over time of each random(0,1).

If watch a random number generator, and it tends to land on the number 100, and does this 1 million times in a row, you can say that based on that data, it is very unlikely it's random. So either you have

A statistical anomaly that would not be seen very often at all, like almost never.

It's 99.9%+ not randomized. Or in other words, almost entirely likely it's fixed.

So in the data sets if we see a pattern repeated over and over, the longer it goes the more likely it is to be fixed.

hrmmm ok you have a LOT of density in there, so i'm just gonna kinda ramble through it.

With regards to the chessboard problem - there is a probability, however infinitesimal - that the chessboard would appear 201 times in a row, but it's much akin to the monkeys making shakespear - it's POSSIBLE, sure, but we'd never see it in our lifetime with a truly random sequence. At least - probably not. I suppose if you had an infinite number of super computers with an infinite number of monitors... =P

With a truly random system, the odds of any number coming up at a given time is NEVER affected by the previous occurrence of that number - i.e. rolling a 6 six times in a row with an unweighted die on a perfect surface, with the same exact roll, etc, etc, etc would not cause the die to have any less of a chance to roll a six again.

When it comes to repeating patterns - you have the odds of the pattern itself occurring, and with every iteration of that pattern, it's really just a BIGGER pattern that you're computing the odds for, not the repetition of the previous pattern!

Let's pretend that you're looking for the pattern 1,3,5,5,4. For the sake of argument, that pattern has odds of 1:2000. Now, you want that pattern to repeat twice, and sure, each instance of that pattern has a 1:2000 chance of occurring, but you're REALLY looking for the pattern 1,3,5,5,4,1,3,5,5,4 - and you need to compute the odds of that larger pattern itself occurring, not the two individual patterns individually.

So - it's not about the memory of the dice, it's about the odds of the dice running that much larger pattern!

Agreed, we are looking at the result of 4000 individual randomised selections.

I'm not so sure, nor do I think that because you've had the number 100 one million times in a row that is it now less likely to appear, if this were the case we could increase our odds of winning the lottery by analysing previous outcomes.

I think we are just recognising a pattern, there is nothing fundamentally different in a random system of pixels between a perfect rendering of Bart Simpson and a 'random jumble of pixels', so of course both are as likely to occur - and by definition a random system doesn't factor in previous itterations, so the likelihood of both Bart Simpson or a 'random jumble of pixels' coming up on the next itteration doesn't change, so we shouldn't be any more surprised to see Bart 17 times in a row than we should if we saw a jumble of pixels (of course our brains won't allow us to simply ignore it !), in that regard it's not really a statistical anomaly, it's no more anomalous than any other arrangement of pixels coming up in any other sequential order, even repeating patterns.

But it would be impossible to know from simply looking at the results.

You cant "know" but you can approach it statistically and looks at the odds. I mean how can you know anything for that matter? Right now if i asked you if the moon exists, you'd probably say yes. But in any given moment, that might not be true.

But over time, if we see the moon day in an out, and we see it's patterns, it's collisions with meteorites, etc. and we know approximately how old it is and can guess how long it might last up to a point. That is barring any 0.000000000000000000000000001% anomalies that wipe it out entirely. That would suck...

Sure, we can't know anything with absolute inerrancy, but within the confines of a fixed or closed system that we define (i.e. the production 4000 randomised on/off results) we can say some things for certain, for example, by definition a randomised system will produce a random result, if we were to add in the caveat 'but the result of all the previous iterations needs to be taken into account and allowed to modify the next iteration' then the system is not, by definition, random, on the other hand if the process is allowed to run without factoring in the previous results (is truly random) then the idea that as a result is returned it reduces the chances of that same result occurring again must be mistaken.

Problem of induction !

I never liked the moon anyhow, when I'm out trying to commit unspeakable crimes, it's there, watching me.

It's as probable (chessboard pattern, 201 times in a row) as any other sequence of 201 arrangements of 4,000 pixels, yes it's a small probability, but no smaller (or larger) than another arrangement.

Yes, but that is true of all arrangements (sequences) of all patterns.

Ok, if there are 5 numbers (1-5) the odds of 1,3,5,5,4 being chosen (in that order) are 1/3,125.

The chances of getting 1,3,5,5,4 twice in a row is 1/9,765,625.

Which is . . . . . just like any other arrangement of 5 numbers (max value = 5) occurring twice ! . . . Or any other arrangement of 5 numbers happening after any other arrangement of 5 numbers (i.e. not duplicating the same result), so the chances of 1,3,5,5,4 - 1,3,5,5,4 is the same as 2,2,2,2,2 - 2,2,2,2,2 . . . . but also the same as 2,3,1,1,5 - 5,2,4,1,4.

Which is kind of my point, we shouldn't be surprised to see a pattern repeat 100 times (but of course we would find it difficult not to see meaning or patterning), if we did see 1,3,5,5,4 come up 1 million times in a row, there would be nothing about that to indicate that the system producing those results was not random.

I get very frustrated by the way GameSalad handles random numbers. Since it is presumably using Lua to generate the random numbers, we're presumably getting random numbers seeded by OS time, and seeded by the second not millisecond. This means if I'm previewing a project and hit refresh a lot, I'll see the same results crop up often. This is less of an issue in-game, of course, but annoys me regularly when testing

I made a project a while back that did something similar with random(1,5) (nowhere near as cool as this though), and it came to a similar conclusion as @Deviros' project. Attached is the result of 1 million iterations of random(1,5). There is a difference of just over 500 between the most frequently occurring number and the least frequently occurring number.

There's some interesting discussion regarding Lua and random numbers. And it seems to be more of an issue on OS X than on Windows.

So I guess the question is, "If a tree falls in the forest and nobody is there, does it still make a sound?" If to the user, it is past their mental capacity to calculate the possible patterns then is it random?

... That's actually a good question. I would expect that would be 'functionally random' - which is, honestly, good enough!

I mean - unless you were doing calculations of extreme importance for some sciency thing, then that metric - 'beyond the capacity to identify patterns' - should be more than adequate to determine randomness.